Using AI, continuous glucose monitors, and an equity approach, diabetes care could be saving many more lives, Stanford Medicine researchers say.

Category: Ethics

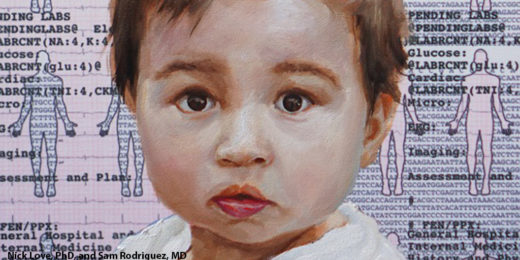

Inequity of genetic screening: DNA tests fail non-white families more often

Research is showing that advanced methods of genetic testing aren’t equally useful for everyone: They’re less accurate for non-white families, raising concerns about how historical gaps in whose DNA gets studied produce inequities in medical care.

Researchers seek healthy checks and balances for how products are designed

The convenience of having digital devices at our fingertips comes with a messy health conundrum, say Stanford Medicine researchers.

AI, medicine and race: Why ending ‘structural racism’ in health care now is crucial

Health care providers must reckon with inherent race-based biases in medicine, which can reinforce false stereotypes in algorithms and lead to improper treatment recommendations or late diagnoses.

New policy is taking sexual orientation, gender out of blood donor equation

New guidelines will continue to ensure the safety of the nation's blood supply, according to the Food and Drug Administration.

How to regulate AI? Bioethicist David Magnus on medicine’s critical moment

The applications for AI in medicine are being explored deeply at Stanford Medicine and elsewhere. Putting guardrails in place now is crucial.

Rethinking large language models in medicine

Stanford Medicine researchers and leaders discuss the need for medical and health professionals to shape the creation of large language models.

Bringing principles of ethics to AI and drug design

Researchers discuss the need for ethics and its integration into research projects that harness artificial intelligence.

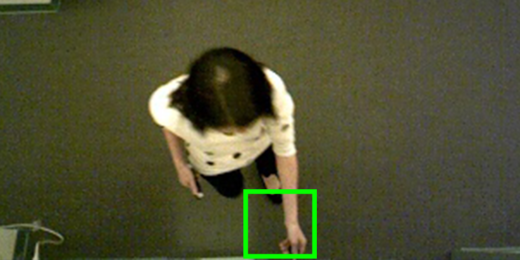

When AI is watching patient care: Ethics to consider

Ethical and legal issues accompany the potential benefits of using computer vision-based ambient intelligence in health care.

Financial transparency may diminish trust in doctors, new study finds

A Stanford study has found that mandated public disclosure of physicians' financial ties may have diminished trust in all physicians.

Inheritance: On family secrets, genetics and ethics

With a DNA test, Dani Shapiro discovered that the man she had thought was her father was not. She discussed the finding, and her writing, on campus.

In the Spotlight: At the intersection of tech, health, and ethics

Nicole Martinez-Martin, a postdoctoral fellow in biomedical ethics, shares her experiences in the realms of teaching, law, and health in this In the Spotlight Q&A.

The future of ethics and biomedicine: An interview

In this radio show, Stanford bioethicist David Magnus and host Russ Altman discuss the ethical implications of using AI in health care.

Considering the challenges posed by technology that tracks whether you took your meds

Advances in digital medicine prompt a call for more study of the potential implications and ethical issues for patients and clinicians.

Journal editor aims to prompt thoughtful review of ethics in precision health

Stanford medical student Jason Neil Batten edits an issue of the American Medical Association's Journal of Ethics devoted to ethics in precision health.

Scientific publishing: How much is too much?

John Ioannidis reflects on the phenomenon of "hyper-publishing," where certain scientists are listed as authors on scores of papers a year.